How AI TRiSM Helps Reduce Risk From AI Models

Without trust, risk, and security baked in, AI is a ticking time bomb. Learn how AI TRiSM helps ensure AI model governance, trustworthiness, fairness, robustness, efficacy and data protection.

It’s 2025 and AI is everywhere, revolutionizing industries as diverse as software development and renewable energy. The rapid pace of innovation is exciting, but it comes with danger. Without trust, risk, and security baked in, AI is a ticking time bomb.

The explosion of generative AI increases productivity, but it also expands the risk surface, whether via prompt injections, hallucinations, data leaks, or other novel issues. So, what can we do about this explosion in risk?

Enter: AI Trust, Risk and Security Management (AI TRiSM), a collection of technological solutions and techniques that, as Gartner defines it, “ensures AI model governance, trustworthiness, fairness, reliability, robustness, efficacy and data protection.”

Why now? Origins and terminology

Gartner coined the term AI TRiSM in 2023. Since then, AI TRiSM solutions have been evolving quickly to keep pace with rapid AI advancements and users’ increasing interest in deploying AI safely and securely.

The term AI TRiSM was first featured in Gartner’s “Tackling Trust and Risk in AI Models” article. As the market has matured, Gartner expanded this coverage into a full Market Guide on AI TRiSM, published in February 2025.

AI TRiSM’s high-level architecture

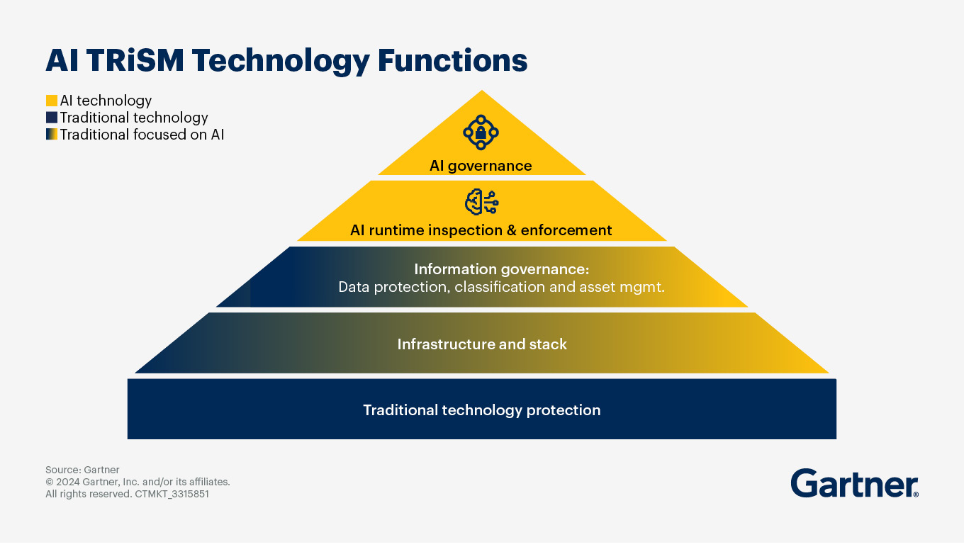

According to Gartner, AI TRiSM spans four technical layers, listed here from the bottom of the stack to the top:

- Traditional protection and infrastructure: Core IT and security foundations

- Data and information governance: Classification, privacy, access controls

- Runtime inspection and enforcement: In-the-moment monitoring and remediation

- AI governance: Model registration, approvals, and lifecycle control

The top two layers — AI governance and AI runtime inspection and enforcement — are new to AI and are, in part, consolidating into a distinct market segment. The bottom two layers represent traditional technology focused on AI.

AI TRiSM solutions give enterprises visibility into all of the AI models that are accessed and used in their networks. They also enable organizations to more safely use AI, ensure AI actions align with organizational intent, keep AI systems secure from malicious actors, and ensure confidential data and intellectual property are properly protected.

Mandatory features of AI TRiSM

According to the Gartner guidance released in February 2025, AI TRiSM solutions should include:

- AI catalog: An inventory of AI entities used in the organization. Think models, agents and applications in various configurations, ranging from embedded AI in off-the-shelf, third-party applications to bring-your-own AI, to retrieval-augmented generation systems, to first-party models.

- AI data mapping: A map of the data used to train or fine-tune AI models, provide context for user queries in a retrieval-augmented generation (RAG) system, or feed agentic AI systems.

- Continuous assurances and evaluation: Checks of performance, reliability, security, or safety against the expectations and metrics used as baselines. Assurances and evaluations are applied both pre-deployment and post-deployment (out of band).

- Runtime inspection and enforcement: Applied to models, applications and agent interactions to support transactional alignment with organizational governance policies. Applicable connections, processes, communications, inputs and outputs are inspected for violations of policies and expected behavior. Anomalies are highlighted and either blocked, auto-remediated or forwarded to humans or incident response systems for investigation, triage, response and applicable remediation.
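To make the runtime inspection and enforcement item more concrete, here is a minimal Python sketch of the inspect-then-act pattern it describes: text is checked against policy, and anomalies are blocked, auto-remediated, or forwarded to a human. The patterns, the `Action` names, and the `inspect` function are all hypothetical and chosen for illustration; a real AI TRiSM product would rely on far richer detection than a few regular expressions.

```python
import re
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    ALLOW = "allow"
    REMEDIATE = "remediate"   # fix automatically, then release
    BLOCK = "block"           # never release
    ESCALATE = "escalate"     # hold and forward to a human reviewer


@dataclass
class Verdict:
    action: Action
    text: str                 # what may be released ("" if held or blocked)
    reasons: list[str]


# Hypothetical policy patterns, for illustration only.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
SECRET = re.compile(r"(?i)\b(api[_-]?key|password)\s*[:=]\s*\S+")
INJECTION = re.compile(r"(?i)ignore (all )?previous instructions")


def inspect(text: str) -> Verdict:
    """Inspect one model input or output and decide how to handle it."""
    if SECRET.search(text):
        return Verdict(Action.BLOCK, "", ["credential detected"])
    if INJECTION.search(text):
        return Verdict(Action.ESCALATE, "", ["possible prompt injection"])
    if EMAIL.search(text):
        redacted = EMAIL.sub("[REDACTED EMAIL]", text)
        return Verdict(Action.REMEDIATE, redacted, ["email address redacted"])
    return Verdict(Action.ALLOW, text, [])
```

The ordering is a design choice in this sketch: the most severe outcome wins, so a message containing both a credential and an email address is blocked rather than redacted.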

AI TRiSM in the real world

There are multiple use cases for implementing AI TRiSM in the deployment and management of enterprise AI. These include:

Government: Enforcing policy in AI grant decision systems

Government agencies are turning to AI for help in evaluating grant applications and distributing funds. But without proper oversight, these systems can become black boxes, raising concerns about fairness and accountability. An AI TRiSM program ensures that policies are embedded in the process, with explainability tools and audit logs that help agencies prove decisions were made responsibly and without bias.

Energy & critical infrastructure: Securing predictive maintenance from adversarial threats

Utilities increasingly rely on AI models to predict equipment failure and optimize grid performance. But without strong controls, these models can be vulnerable to data poisoning or manipulation. An AI TRiSM program helps secure the entire lifecycle with data integrity checks, model monitoring, and runtime protections that keep the lights on and the system resilient.

Financial services: Governing AI in fraud detection systems

Banks use AI to detect suspicious activity in real time, but over time, models can drift, and decisions may become less explainable. An AI TRiSM program brings structure and governance to this process, ensuring models are continuously evaluated, decisions can be justified, and sensitive customer data is always protected by policy-backed controls.
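As one illustration of what continuous evaluation for drift can look like, the sketch below computes the population stability index (PSI), a metric often used in credit and fraud modeling to compare a baseline score distribution with a recent one. PSI is our choice for the example, not something the Gartner guidance prescribes, and the function name, bin count, and smoothing constant are assumptions.

```python
import math


def population_stability_index(expected, actual, bins=10, eps=1e-6):
    """PSI between a baseline sample and a recent sample of model scores.

    Bins are equal-width over the baseline's range; recent values outside
    that range are counted in the first or last bin.
    """
    if not expected or not actual:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0   # avoid zero width for a constant baseline

    def proportions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            counts[min(max(idx, 0), bins - 1)] += 1
        # eps keeps empty bins from producing log(0) or division by zero
        return [max(c / len(values), eps) for c in counts]

    e = proportions(expected)
    a = proportions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))
```

A commonly cited rule of thumb treats a PSI above roughly 0.25 as a significant shift worth investigating, though the right threshold depends on the model and the institution's risk appetite.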

Zooming in: Enabling trustworthy AI in a highly regulated enterprise

AI TRiSM solutions are especially important in highly regulated industries. Let’s look at how this might work in practice, using a real (anonymized) example.

The challenge

A national health insurer was preparing to roll out Microsoft Copilot across internal departments. Like many large enterprises, the insurer was eager to adopt generative AI to improve employee productivity — automating claims reviews, surfacing policy documents, and drafting customer communications. But they quickly hit a wall. Legal and compliance teams raised red flags:

- Where is Copilot getting its data?

- What sensitive information might it expose?

- If something goes wrong, how do we prove governance?

They had policies around AI use but no way to enforce them, no inventory of AI systems, and no evidence trail to satisfy regulators. The risk was too high, and the rollout was paused.

At this point, they could have joined the vast majority of businesses that have attempted to implement new tools like Microsoft Copilot, only to find themselves stuck at the pilot phase. According to one study, only 6% of businesses reported moving to a large-scale deployment of Copilot.

The solution

With an AI TRiSM solution (in this case, the RecordPoint AI Governance Suite), the customer could move past the stalled rollout and put real AI governance into motion.

Step 1: Move from policy-on-paper to operational oversight

The first priorities were:

- Create a live inventory of all AI systems, including shadow usage

- Link each tool to its data sources, model type, risk level, and owner

- Turn their static policy into real, trackable controls

With RexCommand, organizations can catalog all AI systems, including SaaS applications with built-in AI, across the business. From here, they can monitor usage, ownership, and model status. This level of oversight allows organizations to eliminate shadow AI and unauthorized deployments.
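For readers who want a concrete picture of a live AI inventory, the following is a minimal, vendor-neutral sketch in Python. It does not reflect how RexCommand is implemented; the `AISystem` and `AICatalog` classes, their fields, and the example names in the comments are hypothetical. It links each system to its data sources, model type, risk level, and owner, and flags shadow usage by comparing observed tools against the catalog.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class AISystem:
    name: str
    model_type: str                  # e.g. "embedded SaaS assistant"
    risk_level: str                  # e.g. "low", "medium", "high"
    owner: Optional[str] = None
    data_sources: list = field(default_factory=list)


class AICatalog:
    """In-memory inventory of AI systems (illustrative only)."""

    def __init__(self):
        self._systems = {}

    def register(self, system: AISystem) -> None:
        self._systems[system.name] = system

    def shadow_usage(self, observed_names):
        """Tools seen in use (say, in network logs) but absent from the catalog."""
        return sorted(set(observed_names) - set(self._systems))

    def gaps(self):
        """Catalogued systems that lack an owner or a data-source mapping."""
        return sorted(
            name
            for name, s in self._systems.items()
            if s.owner is None or not s.data_sources
        )
```

Keeping the shadow-usage check and the gap check separate mirrors the priorities above: first find what is missing from the inventory, then find what is in the inventory but not yet governed.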

Step 2: Enforce policy through automation and approval workflows

Before staff use any AI tool, it must pass governance checkpoints.

For example, is its training data classified and privacy-vetted? Is there a documented business purpose and owner? Has bias and fairness testing been completed?
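The checkpoint questions above can be expressed as an automated gate. The sketch below is a simplified illustration in Python, not a description of any product's workflow engine; the `ToolSubmission` fields and function names are assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass
class ToolSubmission:
    name: str
    training_data_classified: bool
    privacy_vetted: bool
    business_purpose: str
    owner: str
    bias_testing_complete: bool


def failed_checkpoints(s: ToolSubmission) -> list:
    """Return the governance checkpoints this submission has not yet passed."""
    checks = {
        "training data classified and privacy-vetted":
            s.training_data_classified and s.privacy_vetted,
        "documented business purpose": bool(s.business_purpose.strip()),
        "named owner": bool(s.owner.strip()),
        "bias and fairness testing completed": s.bias_testing_complete,
    }
    return [label for label, passed in checks.items() if not passed]


def is_approved(s: ToolSubmission) -> bool:
    """A tool is cleared for staff use only when every checkpoint passes."""
    return not failed_checkpoints(s)
```

Returning the list of failed checkpoints, rather than a bare yes or no, gives the requester something actionable and gives reviewers a record of why a tool was held back.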

How RexCommand helps

RexCommand simplifies this process, allowing the customer to maintain control at every stage of the AI lifecycle. With RexCommand, they can apply policies consistently, automate risk reviews, and ensure governance is part of daily operations — not an afterthought.

- Automate governance policies across AI projects

- Trigger risk assessments with minimal manual effort

- Integrate seamlessly with existing workflows

Step 3: Provide audit-ready evidence of responsible AI

Each model interaction, approval, and update is tracked, so when auditors come calling, the team has a complete lineage of decisions, not just policy statements.
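One way to picture a decision lineage that stands up to scrutiny is an append-only log in which each entry is chained to the previous one by a hash, so later edits become detectable. The Python sketch below illustrates that idea under our own assumptions; hash chaining is our choice for the example, not a documented feature of any product mentioned here, and the `AuditLog` class and its field names are hypothetical. Timestamps are left out to keep the example deterministic.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, event: dict) -> str:
    # Canonical JSON (sorted keys) so the same event always hashes the same way
    payload = json.dumps({"prev": prev_hash, "event": event}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class AuditLog:
    """Append-only, hash-chained record of interactions, approvals, and updates."""

    def __init__(self):
        self.entries = []

    def record(self, kind: str, actor: str, detail: str) -> None:
        event = {"kind": kind, "actor": actor, "detail": detail}
        prev_hash = self.entries[-1]["hash"] if self.entries else GENESIS
        self.entries.append(
            {"event": event, "prev": prev_hash, "hash": _digest(prev_hash, event)}
        )

    def verify(self) -> bool:
        """Recompute the chain; any altered or reordered entry breaks it."""
        prev_hash = GENESIS
        for entry in self.entries:
            if entry["prev"] != prev_hash:
                return False
            if entry["hash"] != _digest(prev_hash, entry["event"]):
                return False
            prev_hash = entry["hash"]
        return True
```

An in-memory list is only a stand-in here. In practice the entries would need durable, access-controlled storage for the chain to carry evidential weight.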

How RexCommand helps

With full oversight of all AI systems, the organization can now meet regulatory and industry standards with confidence. RexCommand streamlines documentation and reporting so the team is always audit-ready.

- Align with frameworks like NIST, ISO 42001, GDPR, and the EU AI Act

- Maintain a full audit trail of decisions and approvals

- Export compliance reports in a click

Try RexCommand for free

Keen to get started? Sign up for RexCommand for free. And check out our full AI governance suite of solutions.
